Building Data Acquisition Systems for JUNO: Lessons in Large-Scale Detector Design

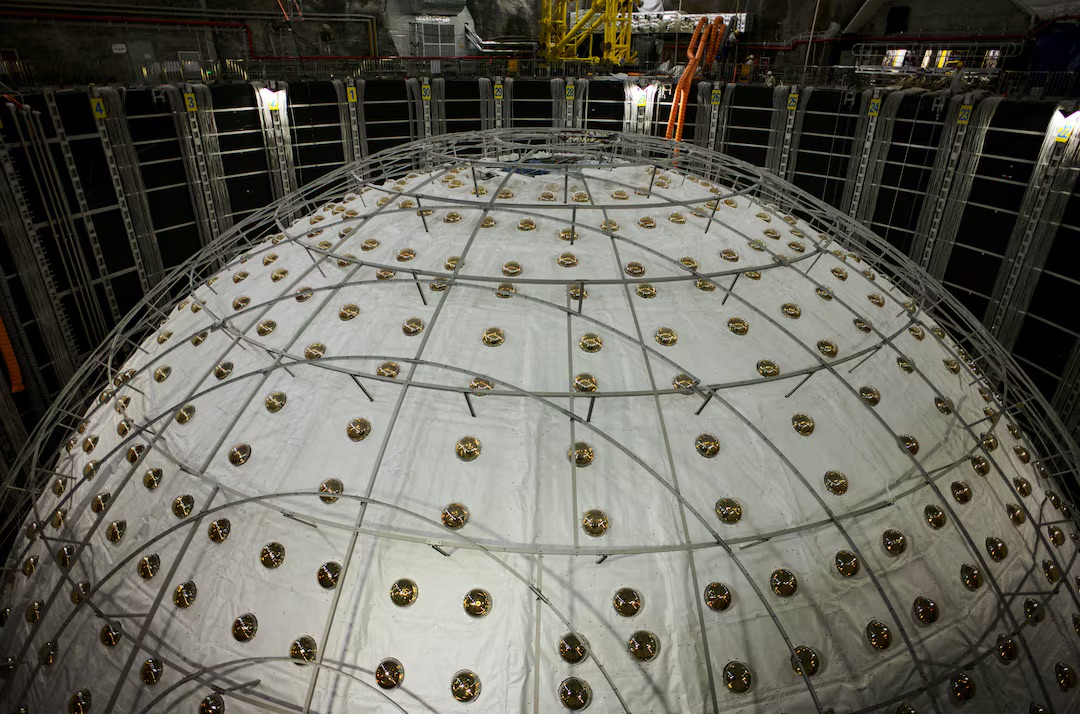

The Jiangmen Underground Neutrino Observatory (JUNO) is a marvel of experimental physics. Located deep underground to shield it from cosmic rays, it uses 20,000 tons of liquid scintillator to detect neutrinos from nuclear reactors, the Sun, supernovae, and other astrophysical sources.

My role in this collaboration focused on developing the Data Acquisition (DAQ) system: the software infrastructure that captures, processes, and stores data from thousands of photomultiplier tubes operating simultaneously.

The Scale of the Challenge

JUNO's detector comprises approximately 20,000 photomultiplier tubes (PMTs), each capable of detecting individual photons. When a neutrino interaction occurs, it produces a flash of light detected by hundreds of PMTs within nanoseconds.

The DAQ system must:

- Capture signals from all PMTs simultaneously

- Identify events in real-time amid background noise

- Process data at rates exceeding a gigabyte per second

- Store information for later analysis

- Operate continuously for years with minimal downtime

Architecture Design

We designed a multi-tier architecture:

Tier 1: Front-End Electronics

Custom-designed readout boards digitize PMT signals at high rates. These boards must be:

- Fast: Capturing nanosecond-scale events

- Precise: Maintaining timing resolution across thousands of channels

- Reliable: Operating in the challenging underground environment

Tier 2: Event Building

Specialized processors collect data from front-end electronics and assemble complete events. This stage implements:

- Trigger logic: Distinguishing genuine neutrino events from background

- Time synchronization: Ensuring all channels agree on event timing

- Data compression: Reducing storage requirements while preserving physics information
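To make the event-building idea concrete, here is a minimal sketch in Python. It assumes hits arrive as (channel, time) pairs that are already synchronized, and it starts a new event whenever the gap between consecutive hits exceeds a coincidence window. The `Hit` type, the `build_events` function, the 300 ns window, and the minimum of three hits are all hypothetical choices for illustration, not the parameters or code used in JUNO.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Hit:
    channel: int     # PMT channel number (hypothetical numbering)
    time_ns: float   # hit time in nanoseconds, assumed already synchronized


def build_events(hits: Iterable[Hit], window_ns: float = 300.0,
                 min_hits: int = 3) -> List[List[Hit]]:
    """Group hits into events by time proximity.

    Hits are sorted by time. A new group starts whenever the gap to the
    previous hit exceeds window_ns. Groups with fewer than min_hits hits
    are treated as noise and dropped.
    """
    events: List[List[Hit]] = []
    current: List[Hit] = []
    for hit in sorted(hits, key=lambda h: h.time_ns):
        if current and hit.time_ns - current[-1].time_ns > window_ns:
            if len(current) >= min_hits:
                events.append(current)
            current = []
        current.append(hit)
    # Flush the last open group.
    if len(current) >= min_hits:
        events.append(current)
    return events
```

In a real system this logic would run on streaming data rather than a fully sorted list, but the grouping rule is the same.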

Tier 3: Storage and Distribution

Long-term data storage and distribution to analysis centers worldwide. We implemented:

- Hierarchical storage: Hot storage for recent data, cold storage for archives

- Data quality monitoring: Real-time checks ensuring detector health

- Global distribution: Replicating data to collaboration institutions
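The hot/cold split can be sketched as a simple age-based policy. The snippet below assumes a catalog that maps file names to the time they were written, and it returns the files old enough to migrate to cold storage. The `plan_migration` name, the file names, and the 30-day retention period are assumptions made for this example only.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def plan_migration(files: Dict[str, datetime], now: datetime,
                   hot_retention: timedelta = timedelta(days=30)) -> List[str]:
    """Return names of files due to move from hot to cold storage.

    A file is due when it was written before (now - hot_retention).
    The result is ordered oldest first.
    """
    cutoff = now - hot_retention
    stale = [(written, name) for name, written in files.items()
             if written < cutoff]
    return [name for _, name in sorted(stale)]
```

A production policy would likely also consider access frequency and replication status, which this sketch ignores.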

Software Development

The software stack combined:

- C++ for performance: Critical paths optimized for speed

- Python for flexibility: Configuration, monitoring, and analysis

- Modern frameworks: Leveraging tools like ZeroMQ for messaging

Real-Time Processing

One of the most challenging aspects was real-time event selection. With events occurring at kilohertz rates, we couldn't store everything. The system needed to:

- Identify interesting events: Neutrino interactions versus background

- Apply sophisticated algorithms: Pattern recognition in microseconds

- Adapt to changing conditions: Detector aging, varying backgrounds

We implemented machine learning algorithms that improved over time, learning to recognize subtle patterns in the data.
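As a much simpler stand-in for the selection algorithms described above, the sketch below shows a classic sliding-window multiplicity trigger: it fires when enough hits land within a short time window. This is not the machine learning approach, and the `multiplicity_trigger` name, the 100 ns window, and the threshold of five hits are hypothetical values picked for the example.

```python
from collections import deque
from typing import Deque, Iterable, List


def multiplicity_trigger(hit_times_ns: Iterable[float],
                         window_ns: float = 100.0,
                         threshold: int = 5) -> List[float]:
    """Return the times at which the trigger fires.

    The trigger fires when the number of hits inside a sliding window of
    width window_ns reaches the threshold. After firing, the window is
    cleared so a single burst of light does not fire repeatedly.
    """
    window: Deque[float] = deque()
    triggers: List[float] = []
    for t in sorted(hit_times_ns):
        window.append(t)
        # Drop hits that have fallen out of the coincidence window.
        while window and t - window[0] > window_ns:
            window.popleft()
        if len(window) >= threshold:
            triggers.append(t)
            window.clear()
    return triggers
```

Sparse, uncorrelated noise hits rarely pile up inside the window, while a genuine light flash seen by many PMTs does, which is why multiplicity is a common first-level criterion.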

Challenges and Solutions

Timing Synchronization

Achieving nanosecond-level timing across thousands of channels required:

- GPS-disciplined clocks: Providing absolute time reference

- Cable calibration: Measuring and compensating for signal delays

- Continuous monitoring: Detecting and correcting timing drifts
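The last two items can be illustrated with a short sketch: subtracting a calibrated per-channel cable delay from raw hit times, and flagging channels whose timing has drifted. It assumes a reference calibration pulse whose expected arrival time is known, so each channel produces a list of residuals (measured minus expected). The function names, the delay values, and the 1 ns tolerance are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def correct_time(raw_time_ns: float, channel: int,
                 cable_delay_ns: Dict[int, float]) -> float:
    """Subtract the calibrated cable delay of a channel from a raw hit time."""
    return raw_time_ns - cable_delay_ns[channel]


def drifting_channels(residuals_ns: Dict[int, List[float]],
                      tolerance_ns: float = 1.0) -> Dict[int, float]:
    """Return channels whose mean timing residual exceeds the tolerance.

    residuals_ns maps a channel to the (measured - expected) arrival
    times of a reference pulse, taken after cable-delay correction.
    The returned value is the estimated offset for each drifting channel.
    """
    drifts: Dict[int, float] = {}
    for channel, values in residuals_ns.items():
        offset = mean(values)
        if abs(offset) > tolerance_ns:
            drifts[channel] = offset
    return drifts
```

The offsets returned by `drifting_channels` could then be folded back into the delay table, closing the loop between monitoring and correction.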

Data Quality

Ensuring data quality involved:

- Automated monitoring: Real-time checks of detector performance

- Alerting systems: Notifying experts of potential issues

- Diagnostic tools: Allowing quick identification of problems
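A minimal sketch of automated monitoring with alerting might compare each channel's hit rate against the detector-wide median and flag outliers. The `flag_channels` and `format_alerts` names and the factors of 0.2 and 5 are assumptions for illustration, not the checks actually used.

```python
from statistics import median
from typing import Dict, List


def flag_channels(rates_hz: Dict[int, float],
                  low_factor: float = 0.2,
                  high_factor: float = 5.0) -> Dict[int, str]:
    """Flag channels whose hit rate is far from the median rate.

    Channels below low_factor * median are flagged "low" (possibly dead);
    channels above high_factor * median are flagged "high" (possibly noisy).
    """
    reference = median(rates_hz.values())
    flags: Dict[int, str] = {}
    for channel, rate in rates_hz.items():
        if rate < low_factor * reference:
            flags[channel] = "low"
        elif rate > high_factor * reference:
            flags[channel] = "high"
    return flags


def format_alerts(flags: Dict[int, str]) -> List[str]:
    """Turn flags into human-readable alert lines, ordered by channel."""
    return [f"channel {ch}: {kind} rate" for ch, kind in sorted(flags.items())]
```

Using the median rather than the mean keeps a few misbehaving channels from distorting the reference they are compared against.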

Scalability

The system needed to scale:

- Horizontally: Adding more processing nodes as needed

- Vertically: Handling increased event rates from detector improvements

- Future-proof: Accommodating technologies not yet invented
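Horizontal scaling can be illustrated by how readout channels are spread over processing nodes. The sketch below uses a plain round-robin assignment, so adding a node only requires recomputing the map. The `assign_channels` function is hypothetical, and a real deployment might prefer a scheme that moves fewer channels when the node count changes.

```python
from typing import Dict, List


def assign_channels(n_channels: int, n_nodes: int) -> Dict[int, List[int]]:
    """Distribute channel numbers over nodes in round-robin order.

    Node k receives channels k, k + n_nodes, k + 2 * n_nodes, and so on,
    which keeps the load per node within one channel of every other node.
    """
    if n_nodes < 1:
        raise ValueError("at least one processing node is required")
    return {node: list(range(node, n_channels, n_nodes))
            for node in range(n_nodes)}
```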

Lessons Learned

This project taught me invaluable lessons applicable across experimental physics:

Modularity Matters

Designing modular systems allowed us to:

- Test components independently

- Replace failing modules without complete shutdowns

- Upgrade subsystems as technology improved

Documentation is Critical

With hundreds of collaborators, clear documentation was essential. We maintained:

- Technical specifications: Detailed design documents

- User guides: Helping shift operators run the system

- Developer documentation: Enabling others to contribute

Communication is Key

Working across time zones and institutions required:

- Regular meetings: Video conferences coordinating development

- Shared repositories: Version-controlled code accessible to all

- Clear protocols: Decision-making processes everyone understood

Impact on My Career

The JUNO DAQ project fundamentally shaped my approach to experimental physics. It taught me that modern physics is as much about software and engineering as it is about fundamental theory.

These skills proved invaluable in my later work on LIGO, ETpathfinder, and the Rasnik alignment system. Large-scale detector projects share common challenges, and solutions developed for neutrino physics apply equally to gravitational wave astronomy.

The Future of JUNO

JUNO is now operating, collecting data that will:

- Determine the neutrino mass hierarchy: Resolving a fundamental question in particle physics

- Measure oscillation parameters: With unprecedented precision

- Search for rare phenomena: Proton decay, supernova neutrinos, and more

The DAQ system we built is making these discoveries possible.

This work was conducted at RWTH Aachen University and Forschungszentrum Jülich (2018-2020).